The PanScales collaboration has just presented new simulation algorithms that describe, with high accuracy, the physics occurring across the entire energy range of the Large Hadron Collider at CERN.

The Large Hadron Collider (LHC) at CERN spans a very wide range of energy and distance scales. The fundamental interactions it probes reach energy scales well beyond a teraelectronvolt (TeV), corresponding to distances below 2 × 10⁻¹⁹ m. At these energies, the LHC is exploring as-yet uncharted territory. The particles observed in the detectors, by contrast, can have energies several orders of magnitude lower.
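As an order-of-magnitude check of the energy-to-distance correspondence quoted above, the distance resolved at energy E is roughly ħc/E. Using ħc ≈ 197.3 MeV·fm, 1 TeV = 10⁶ MeV and 1 fm = 10⁻¹⁵ m:

$$
d \sim \frac{\hbar c}{E} = \frac{197.3\ \text{MeV}\cdot\text{fm}}{10^{6}\ \text{MeV}} \approx 1.97\times 10^{-4}\ \text{fm} \approx 2\times 10^{-19}\ \text{m}.
$$

Higher energies resolve correspondingly shorter distances.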

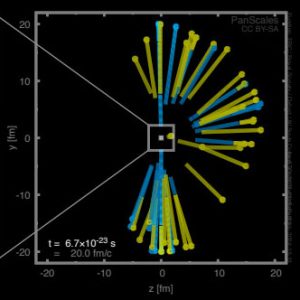

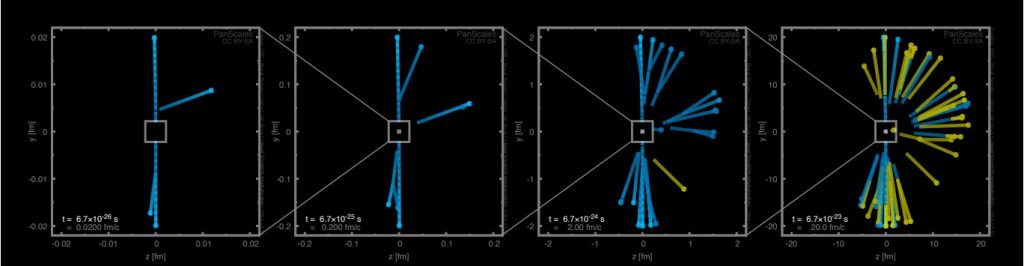

The PanScales project [1] is developing new conceptual advances to better bridge this wide energy span. The underlying methodology consists of simulating [2] the successive branching of quarks and gluons (the fundamental particles subject to the strong interaction), as illustrated in the accompanying picture. The novelty of the PanScales approach lies in the close connection it maintains with quantum chromodynamics (QCD), the underlying theory of the strong interaction.
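To give a feel for what "successive branching" means in practice, here is a deliberately simplified toy in Python. It is *not* the PanScales algorithm. It assumes a fixed strong coupling, keeps only soft-and-collinear gluon emissions from a quark-antiquark pair, treats emissions as independent, and ignores momentum conservation (recoil), gluon splittings, and the colour and spin effects that PanScales handles. The names `toy_shower` and `Emission` and the parameter values are illustrative assumptions. What the sketch does show is the ordering: emissions are generated one after another, from a transverse momentum kt close to the collision energy Q down to a low cutoff, with each step drawn from the no-emission (Sudakov) probability.

```python
import math
import random
from dataclasses import dataclass

# Illustrative toy parameters (assumptions, not PanScales settings).
ALPHA_S = 0.118      # fixed strong coupling; real showers let it run with kt
C_F = 4.0 / 3.0      # colour factor for gluon emission off a quark


@dataclass
class Emission:
    kt: float   # transverse momentum of the emitted gluon [GeV]
    eta: float  # rapidity of the emitted gluon


def toy_shower(Q, kt_cut, rng):
    """Generate successive gluon emissions off a quark-antiquark pair of
    energy Q, ordered from high to low kt, down to kt_cut.

    Toy model: soft-collinear limit, fixed coupling, independent emissions,
    no recoil and no gluon splittings. With t = ln(Q/kt), the emission
    density per unit t is c*t, because the available rapidity range at a
    given kt is [-t, t].
    """
    c = 4.0 * ALPHA_S * C_F / math.pi
    t_max = math.log(Q / kt_cut)
    t = 0.0
    emissions = []
    while True:
        # The probability of no emission between t and t_new is
        # exp(-(c/2) * (t_new**2 - t**2)); invert it for a uniform u.
        u = 1.0 - rng.random()  # u in (0, 1], avoids log(0)
        t = math.sqrt(t * t - 2.0 * math.log(u) / c)
        if t >= t_max:
            break  # the next emission would fall below the cutoff: stop
        kt = Q * math.exp(-t)
        eta = rng.uniform(-t, t)  # rapidity range open at this kt
        emissions.append(Emission(kt, eta))
    return emissions


if __name__ == "__main__":
    rng = random.Random(1)
    for e in toy_shower(Q=91.2, kt_cut=1.0, rng=rng):
        print(f"kt = {e.kt:6.2f} GeV, eta = {e.eta:+.2f}")
```

Each call produces a different random event. Averaged over many events, the number of emissions approaches c·t_max²/2, the integral of the emission density. That is the kind of single-quantity prediction an analytic calculation would give directly, whereas the simulation delivers complete events.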

Simulations like those developed in PanScales, known as parton showers, are used in almost every publication in high-energy collider physics. Their first incarnation dates back to the late 1970s. The main reason for their success is their flexibility: simulated events can be put through the same range of analyses and studies as real data. Examples include testing new theoretical ideas, training artificial-intelligence neural networks, and assessing uncertainties in experimental measurements.

For simulations to be as trustworthy as possible, their algorithms must encode the correct theoretical description of the fundamental interactions. This is where the PanScales project excels. Whereas earlier simulation tools were limited in accuracy, the new algorithms developed by PanScales describe the physics across the wide LHC energy span with high accuracy. Previously, such accuracy was achievable only in analytic calculations that focused on one property of an event at a time, for example the average number of particles in an event.

The figure above shows an example of how the latest PanScales developments, which reach next-to-next-to-leading logarithmic (NNLL) accuracy [3], yield a much better description of collider data than the earlier state of the art, next-to-leading logarithmic (NLL) accuracy, which was developed over the past few years by several groups worldwide.
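Roughly speaking, the labels NLL and NNLL count how many towers of large logarithms a calculation or simulation reproduces correctly. Schematically, for an event-shape observable v whose small values produce a large logarithm L = ln(1/v), the cumulative distribution Σ(v) (the fraction of events with observable value below v) can be organised as

$$
\ln \Sigma(v) = L\, g_1(\alpha_s L) + g_2(\alpha_s L) + \alpha_s\, g_3(\alpha_s L) + \dots
$$

Leading-logarithmic (LL) accuracy requires the function g₁ to be correct, NLL additionally requires g₂, and NNLL additionally requires g₃. This is the standard counting for event shapes; the precise accuracy criteria used in [3] for each class of observables may differ in detail.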

These developments are expected to have a major impact on the future of the LHC program and of possible future colliders. They will help provide crucial training data for machine learning. They will also help the experiments reach high precision across a wide range of measurements, including studies of the novel properties of the Higgs field and searches for potential new physics at higher energies.

At the IPhT, PanScales is led by Gregory Soyez, together with former postdoc Alba Soto Ontoso and new CNRS recruit Silvia Ferrario-Ravasio. The other collaborating institutions are Oxford, University College London, Manchester, CERN, Nikhef, Granada and Monash.

References:

[1] PanScales, https://gsalam.web.cern.ch/panscales/

[2] M. van Beekveld, M. Dasgupta, B. El-Menoufi, S. Ferrario-Ravasio, K. Hamilton, J. Helliwell, A. Karlberg, R. Medves, P. Monni, G. Salam, L. Scyboz, A. Soto-Ontoso, G. Soyez (IPhT), R. Verheyen, SciPost Phys. Codeb. 2024 (2024) 31 [arXiv:2312.13275]. See also https://gitlab.com/panscales/panscales-0.X

[3] M. van Beekveld, M. Dasgupta, B. El-Menoufi, S. Ferrario-Ravasio, K. Hamilton, J. Helliwell, A. Karlberg, P. Monni, G. Salam, L. Scyboz, A. Soto-Ontoso, G. Soyez (IPhT), "A new standard for the logarithmic accuracy of parton showers", arXiv:2406.02661, accepted for publication in Phys. Rev. Lett.